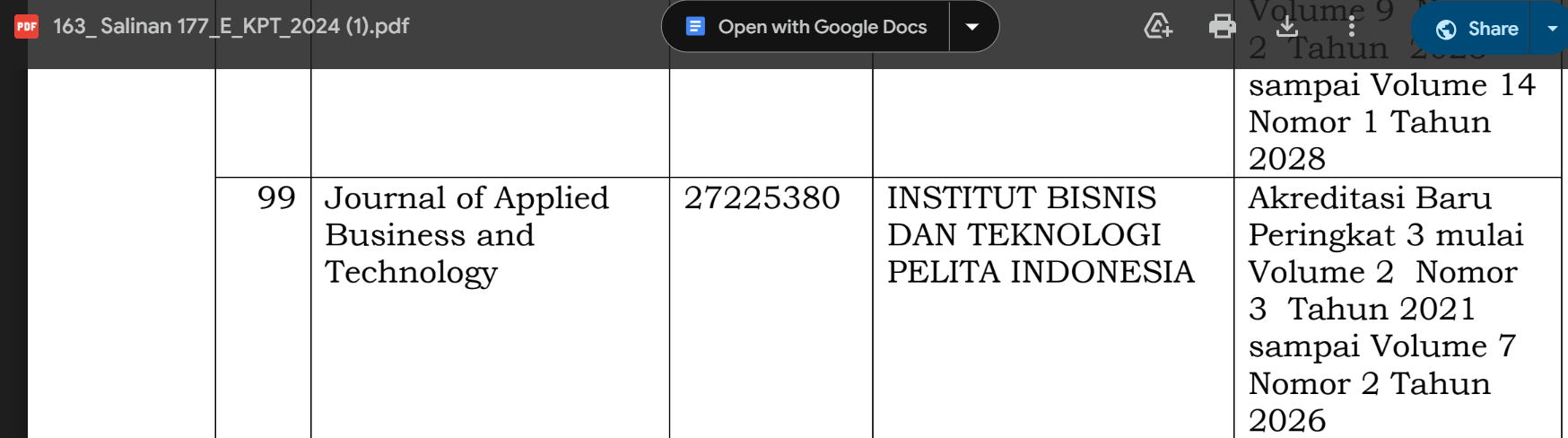

Increasing Trust in AI with Explainable Artificial Intelligence (XAI): A Literature Review

DOI:

https://doi.org/10.35145/jabt.v5i3.193

Keywords:

XAI, Artificial Intelligence, Machine Learning, XAI Model, XAI Implementation

Abstract

Artificial Intelligence (AI) is one of the most versatile technologies developed so far. Its applications span science, art, medicine, business, law, education, and more. Despite its sophistication, AI lacks one key quality, transparency, and this often limits its contribution in specific fields. As AI systems grow in complexity, their inner workings become too difficult to comprehend, turning the process into a “black box” in which humans cannot trace how a result came about. This lack of transparency makes AI unauditable, unaccountable, and untrustworthy. Explainable Artificial Intelligence (XAI) bridges this gap. It is an approach that makes the process behind AI algorithms comprehensible to humans. This allows institutions to develop AI more responsibly and stakeholders to place more trust in it. For example, XAI improves risk assessment in finance by making credit evaluation transparent, and in medicine, clearer decision-making increases the reliability and accountability of diagnostic tools. Owing to the development of XAI, AI can now extend its contributions to legally regulated and complex domains.
